2021/4/16 结果反馈与讨论记录

2021/4/16

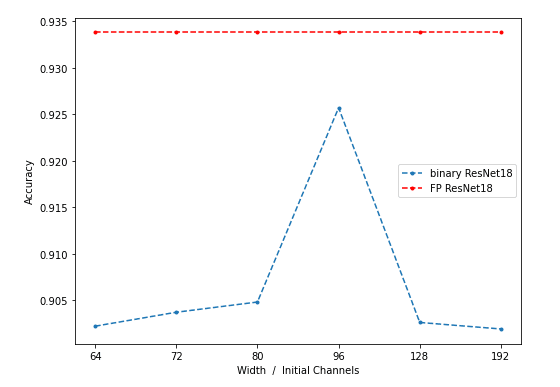

Channel Widening Exp

- 实验条件(?):XNOR-ResNet18, CIFAR10数据集,200 epoch run

- Accuracy数据计算:后10 epoch validation accuracy算术平均。

- 结论:加宽通道在一定范围内可以提高accuracy,但是难以到达FP ResNet18的精度,且通道过宽对accuracy有害。

- 可能原因:optimizer设置问题,比如weight decay,可能存在overfitting.

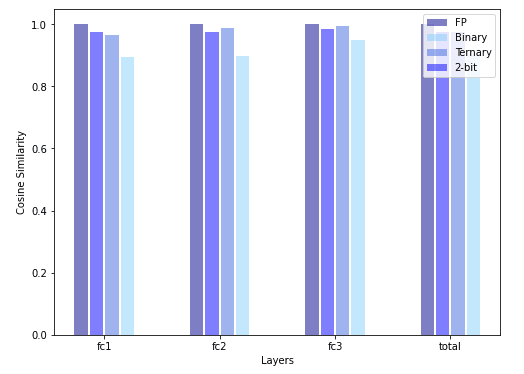

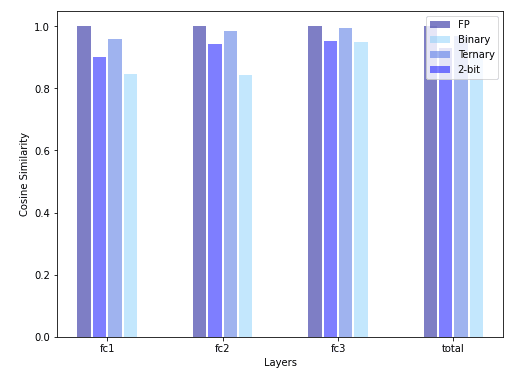

BinaryDuo CDG Validation

- 实验条件:3层MLP(32/32/32), 输入数据服从N(0,1),维度为(1000000,32) epsilon=0.1

raw data:

s_full = [1.0000, 1.0000, 1.0000, 1.0000]

s_bina = [0.8934, 0.8968, 0.9629, 0.9046]

s_tern = [0.9653, 0.9874, 0.9933, 0.9747]

s_2_b = [0.9752, 0.9755, 0.9847, 0.9747]

- 实验条件:3层MLP(32/32/32), 输入数据服从N(0,1),维度为(1000000,32) epsilon=0.01

raw data:

s_full = [1.0000, 1.0000, 1.0000, 1.0000]

s_bina = [0.8468, 0.8430, 0.9488, 0.9205]

s_tern = [0.9586, 0.9841, 0.9945, 0.9672]

s_2_b = [0.9009, 0.9419, 0.9516, 0.9303]

陈老师:

- loss下降

- NES(cheap, apply to real model(resnet18 cifar

- CDG as upperbound, STE as lowerbound

- loss descendence plot (variance/mean)

- convergent low; less sample yet better estimation

- 比较estimator(少样本loss下降快)

- 预期产出:算法(特殊性:有grad/NES等传统算法没有grad)

- ResNet18 training accuracy -> overfitting?

TO-DO

- WRPN related:

- 调一下2x channels / 3x channels的情况,可以看一下training accuracy和training loss(有没有出现过拟合,陈老师说channel++ accuracy会严格变好?)

- NES related:

- 实现NES,在toy model上看看效果?可以画loss随batch的下降曲线,CDG作为真实gradient(的估计)作为upper bound,STE作为被比较的对象(or lowerbound)(文章我还没有读过…)

- 将NES用到ResNet上(这里因为计算开销应该就用不了CDG了,直接和STE比?)

- Framework related:

- 比一下awnas和现在实现的training setting,看看训不上去的原因在哪

- 把接口改得稍微好看点

- BMXNet baseline related:

- 有卡挂上去调完块内结构的model/lr?